The Robot that Learns

By David Goddard. Photography by Randall Brown.

A project in the Min H. Kao Department of Electrical Engineering and Computer Science that involves neon doesn’t sound out of the ordinary at first blush; the word evokes images of Times Square, Piccadilly Circus, and the signs of yesteryear.

But this neon comes from the computer science side of the department and has more to do with the future of artificial intelligence and robotics.

The project—officially titled NeoN: Neuromorphic Control for Autonomous Robotic Navigation—aims to build a navigation system that uses neuromorphic control.

Graduate student Parker Mitchell and undergraduate Grant Bruer teamed up with ORNL’s Katie Schuman (’15, PhD, CompSci) to serve as leads on the project.

As opposed to programmed control, where the operator designs a framework telling the machine what to do, neuromorphic control allows the machine to learn independently by running through several different computer scenarios.

In this case, the robot was asked to navigate around a series of obstacles placed on the floor of a room, the idea being that a machine could be taught to learn from its mistakes.

Each scenario presented the robot with hundreds of thousands of maneuvers to choose from. As Bruer points out, finding the optimal path would have taken prohibitively long in a physical environment, so the team was forced to find a virtual solution.

Thanks to Schuman’s connections at the lab, the answer was Titan, ORNL’s room-sized supercomputer.

“We used Titan to run different scenarios through the brain, keeping the ones that worked and ‘killing off’ the ones that didn’t,” said Mitchell. “It’s the neuromorphic equivalent of survival of the fittest.”
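The “survival of the fittest” loop Mitchell describes can be illustrated with a toy evolutionary search. The sketch below is not the team’s code: the `fitness` function, the population size, and the mutation scale are all hypothetical stand-ins, and a real run would score each candidate network by simulating the robot rather than by a simple formula.

```python
import random


def fitness(candidate):
    # Hypothetical stand-in score (an assumption, not the team's metric):
    # higher is better, with the best possible value at all zeros.
    return -sum(x * x for x in candidate)


def mutate(candidate, rng, scale=0.1):
    # Copy the parent and nudge every value by a little Gaussian noise.
    return [x + rng.gauss(0.0, scale) for x in candidate]


def evolve(pop_size=20, genome_len=4, generations=30, seed=0):
    rng = random.Random(seed)
    population = [
        [rng.uniform(-1.0, 1.0) for _ in range(genome_len)]
        for _ in range(pop_size)
    ]
    history = []  # best score seen at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        # Keep the half that worked, "kill off" the half that didn't.
        survivors = population[: pop_size // 2]
        # Refill the population with mutated copies of survivors.
        children = [
            mutate(rng.choice(survivors), rng)
            for _ in range(pop_size - len(survivors))
        ]
        population = survivors + children
    population.sort(key=fitness, reverse=True)
    history.append(fitness(population[0]))
    return population[0], history


if __name__ == "__main__":
    best, history = evolve()
    print("first best score:", history[0])
    print("final best score:", history[-1])
```

Because the survivors carry over unchanged, the best score can never get worse from one generation to the next; it can only hold steady or improve.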

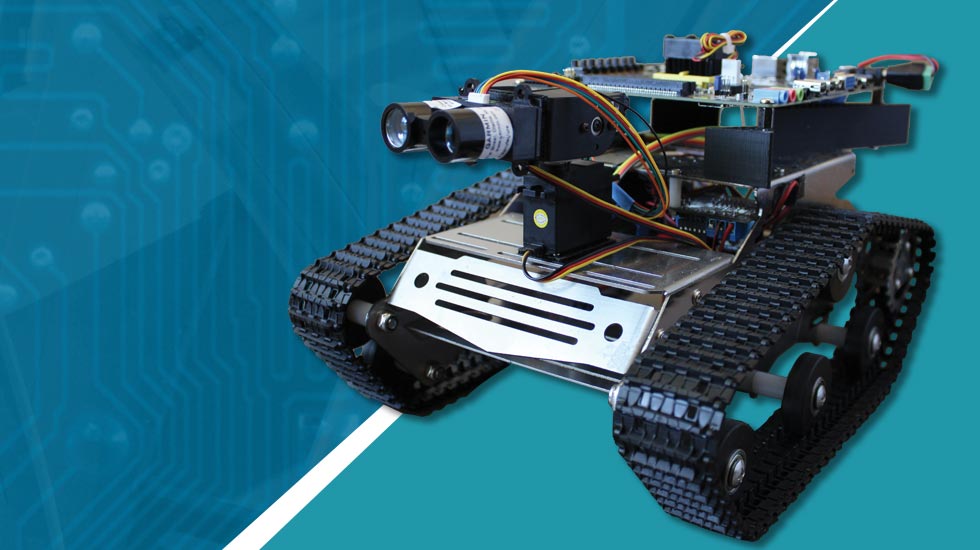

From there, the team paired the best-performing maneuvers from the simulation with lidar to give the machine “eyes.”

Lidar uses light to detect objects in much the same way as radar uses radio waves and sonar uses sound.
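For a sense of the arithmetic involved, here is a minimal sketch that assumes a pulsed, time-of-flight lidar; the article does not say which kind of sensor the robot carries, and the function name is made up for illustration. The sensor times a light pulse’s round trip and halves it to get the one-way distance.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in a vacuum


def distance_from_round_trip(seconds):
    # Hypothetical helper: the pulse travels out and back,
    # so the one-way distance is half the total path.
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2


if __name__ == "__main__":
    # A 20-nanosecond round trip corresponds to an object about 3 meters away.
    print(distance_from_round_trip(20e-9))
```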

It allows the robot’s brain to recognize objects in its path and react to them in the real world the same way it learned to in the simulations, giving the robot a certain level of autonomy.

“The idea is to cover as much of an area as possible while not hitting things,” said Schuman. “It first learns to avoid stationary objects and then adapts to avoid moving obstacles.”

Schuman pointed out that applications for the project range from search and rescue operations to any other situation that might be too dangerous for humans.

The team’s work could also be applied to drones, which could help further in those kinds of situations.

The idea came out of the department’s TENNLab—Neuromorphic Architectures, Learning, Applications—where Mitchell, Bruer, and Schuman are members, along with UT’s John Fisher Distinguished Professor Mark Dean, Professor James Plank, and Associate Professor Garrett Rose.

All of those TENNLab members advised on NeoN, and nearly two dozen students in the department helped design the robot’s body.